Today, AI chatbots such as ChatGPT can crawl your website and use its content without asking for permission.

This practice, known as “scraping”, worries many website owners who want to protect their original and exclusive content.

The good news is that there are ways to keep these AI tools from accessing your website.

One of the most effective strategies is to configure your site's robots.txt file. This file acts as a gatekeeper, telling bots which parts of your site they may visit.

In this article we show you which types of bots exist and how you can use robots.txt to block AI bots such as ChatGPT specifically, along with other common bots.

We will also look at the pros and cons of this decision, to help you understand how it can affect your site's visibility, your SEO and, most importantly, the protection of your content.

The main AI bots that access your website

Practically every company with large language models has its own bots to comb the web and gather information.

Below is a list of the most popular ones:

GPTBot: what it is and what it does

GPTBot is a web crawler developed by OpenAI.

Its main job is to browse the web and collect information from websites, which may then be used to improve future AI models.

GPTBot identifies itself with a specific user agent. According to OpenAI, the pages it crawls are filtered to exclude content behind paywalls and content containing personally identifiable information.

ChatGPT-User: what it is and what it does

ChatGPT-User is another OpenAI user agent, used by plugins in ChatGPT.

Unlike GPTBot, ChatGPT-User does not crawl the web automatically.

Instead, it is used to carry out direct actions requested by ChatGPT users.

In other words, it fetches web pages to answer queries that users make in real time through ChatGPT.

What are the differences between GPTBot and ChatGPT-User?

The main differences between GPTBot and ChatGPT-User lie in their purpose and the way they operate:

GPTBot is designed to crawl and collect data extensively and automatically in order to feed and improve AI models, much like the traditional crawlers used by search engines.

ChatGPT-User, by contrast, is triggered to look up and fetch the information needed to answer user queries in real time, without performing any extensive automatic crawl.

Anthropic-ai: what it is and what it does

Anthropic-ai is a web crawler operated by Anthropic.

It focuses on downloading data to train large language models (LLMs), such as the ones that power Claude.

Its main task is to collect web content, acting as an “AI Data Scraper”.

That said, the specifics of how it selects which sites to crawl are generally unclear.

Google-Extended: what it is and what it does

Google-Extended is a robots.txt product token provided by Google. Publishers use it to control whether content from their sites can be used as training material for AI products such as Bard and the Vertex AI generative APIs.

Other AI crawlers: Cohere-ai

Cohere-ai is a bot operated by Cohere, used mainly in its AI chat products.

It is triggered by user prompts when content needs to be retrieved from the internet.

Unlike traditional web crawlers, cohere-ai does not browse the web automatically. It makes targeted visits to websites based on individual user requests.

How to block AI bots so they don't use my content

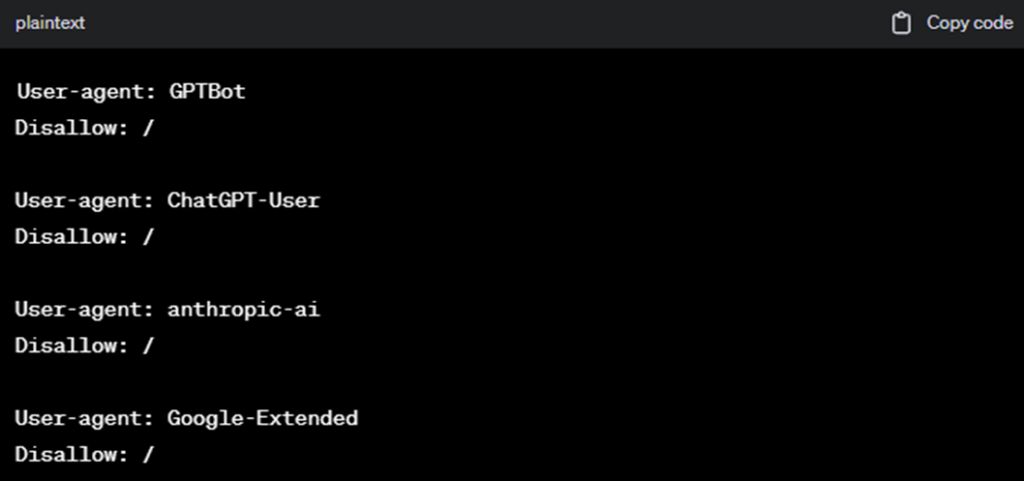

To block these bots from accessing your website, you can use the robots.txt file.

It is the standard tool webmasters use to control crawler access.

It is a plain-text file placed at the root of your domain:

yourdomain.com/robots.txt

Below we explain the rules you need to add to block each AI bot:

How to block OpenAI's bots

Block GPTBot:

Add the following lines to your robots.txt file:

User-agent: GPTBot
Disallow: /

Block ChatGPT-User:

Similarly, to deny access to ChatGPT-User, add:

User-agent: ChatGPT-User
Disallow: /

How to block Anthropic / Claude bots

To block anthropic-ai from accessing your website, edit the robots.txt file at the root of your domain and add the following lines:

User-agent: anthropic-ai
Disallow: /

How to block Google Bard / Vertex AI bots

To control or block Google-Extended on your website, add the following lines to your robots.txt file:

User-agent: Google-Extended
Disallow: /
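Before publishing rules like these, it can help to check that they behave as expected. The following is a minimal sketch using Python's standard `urllib.robotparser` module. The combined `ROBOTS_TXT` string, the `blocked_bots` helper and the `example.com` URL are illustrative assumptions, not part of any official tooling, and Python's parser may not match every crawler's own interpretation of the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical complete file that combines the rules from this section.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ChatGPT-User
Disallow: /

User-agent: anthropic-ai
Disallow: /

User-agent: Google-Extended
Disallow: /
"""

AI_BOTS = ["GPTBot", "ChatGPT-User", "anthropic-ai", "Google-Extended"]


def blocked_bots(robots_txt: str, bots: list[str],
                 url: str = "https://example.com/") -> dict[str, bool]:
    """Map each bot name to True when the rules forbid it from fetching url."""
    parser = RobotFileParser()
    # parse() takes the file content as a list of lines; no network access needed.
    parser.parse(robots_txt.splitlines())
    return {bot: not parser.can_fetch(bot, url) for bot in bots}


if __name__ == "__main__":
    # Googlebot is included to confirm that regular search crawling stays allowed.
    for bot, blocked in blocked_bots(ROBOTS_TXT, AI_BOTS + ["Googlebot"]).items():
        print(f"{bot}: {'blocked' if blocked else 'allowed'}")
```

Keep each `User-agent` line directly above its `Disallow` line: some parsers, including this one, treat a blank line between them as the end of the group.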

How effective is robots.txt at blocking AI?

Blocking AI bots through robots.txt is the most effective method currently available, but it is not fully reliable.

The first problem is that you have to name every bot you want to block, and who can keep track of every AI bot that comes onto the market?

The next drawback is that the rules in your robots.txt file are not binding. While bots such as Common Crawl and ChatGPT honor them, many others do not.

Another big caveat is that you can only block AI bots from future crawls.

You cannot remove data collected in earlier crawls, nor send requests to companies such as OpenAI to delete all of your data.
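One way to ease the first problem is to keep the bot names in a single list and generate the rules from it, so adding a newly announced bot becomes a one-line change. This is only a sketch: `build_robots_rules` is a hypothetical helper, and the list passed to it is an example you would need to maintain yourself.

```python
def build_robots_rules(bots: list[str]) -> str:
    """Build one robots.txt group per bot, each disallowing the whole site."""
    groups = []
    seen = set()
    for name in bots:
        name = name.strip()
        key = name.lower()
        # Skip empty entries and case-insensitive duplicates.
        if not name or key in seen:
            continue
        seen.add(key)
        groups.append(f"User-agent: {name}\nDisallow: /\n")
    # A blank line between groups keeps the file readable.
    return "\n".join(groups)


if __name__ == "__main__":
    # Example list only; extend it as new AI bots are announced.
    print(build_robots_rules(["GPTBot", "ChatGPT-User", "CCBot", "anthropic-ai"]))
```

The generated text can be appended to an existing robots.txt. It does nothing about the second problem: bots that ignore the file will ignore generated rules as well.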

Which websites are blocking AI bots? Some examples:

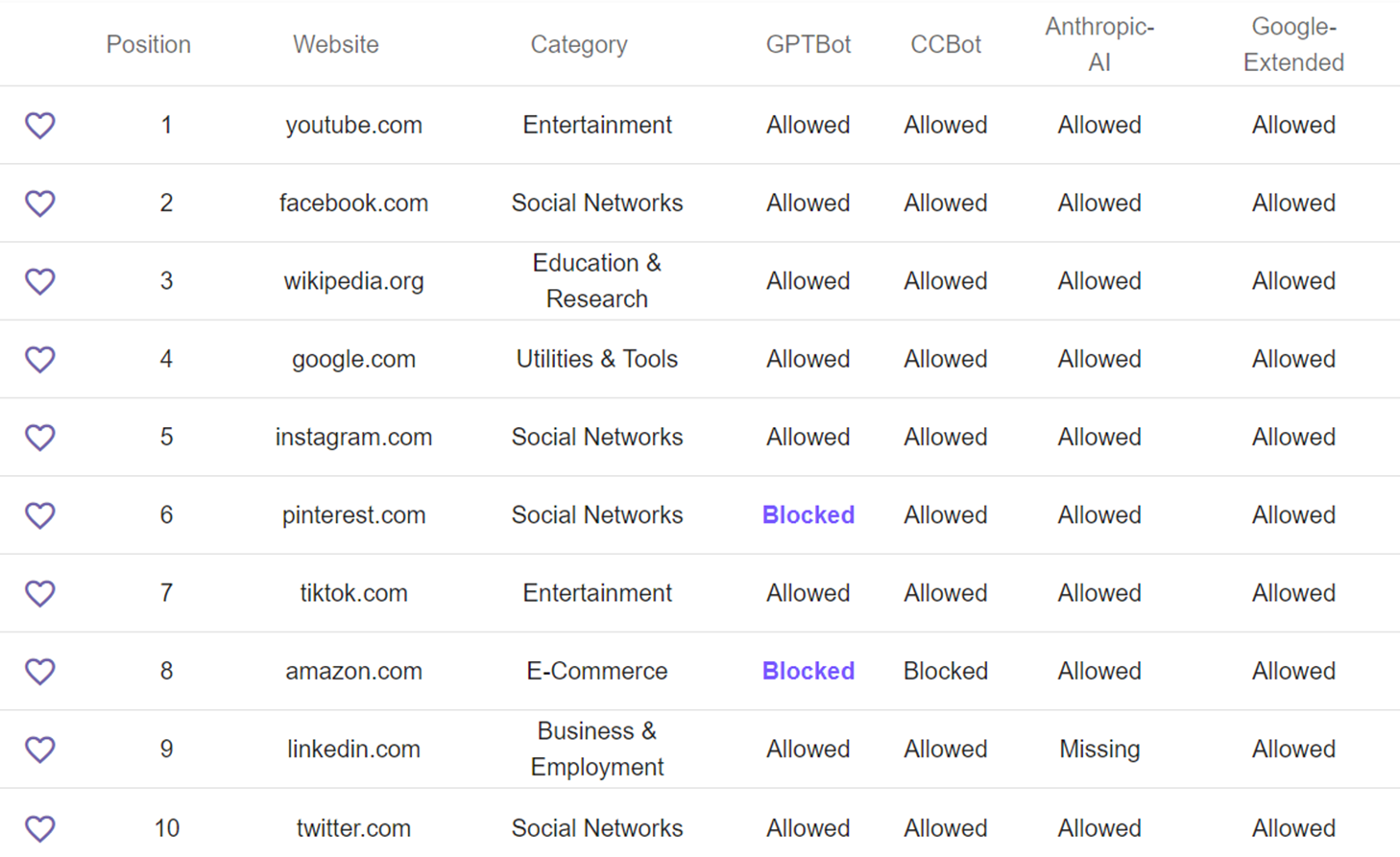

Top 10 websites

The table shows which of the world's leading websites are blocking the various AI-related bots.

As you can see, Pinterest and Amazon are the only ones that have taken action so far.

Top 1,000 websites: how many have blocked AI bots?

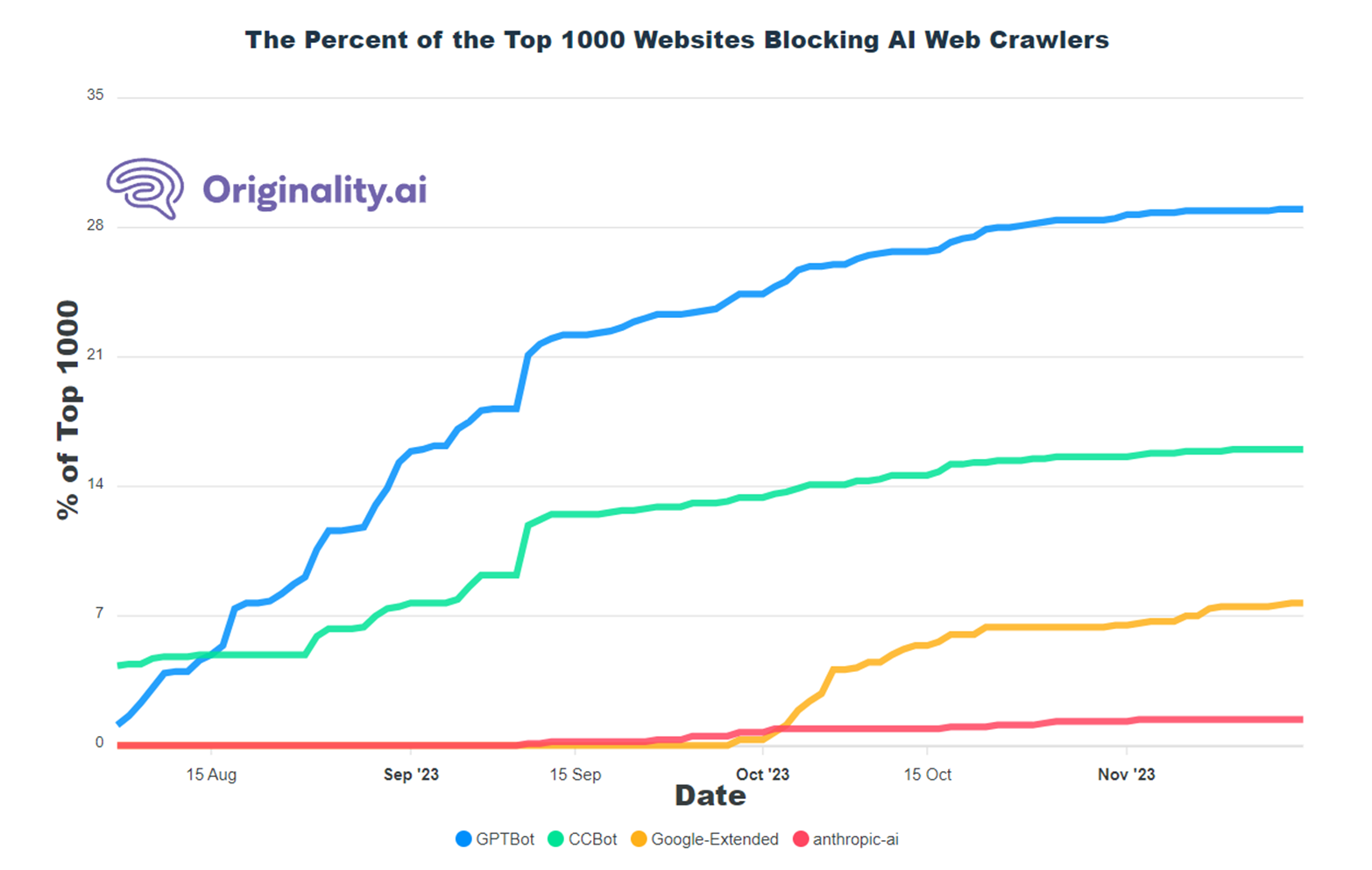

Among the world's 1,000 most popular websites, blocking rates vary widely. The chart shows that:

- GPTBot is the most blocked, at 29%

- CCBot is second, at 16%

- Google-Extended is third, at 7.7%

- anthropic-ai is fourth, at 1.4%

This points to greater concern about content scraping by GPTBot, while anthropic-ai appears to worry site administrators the least.

Or it may simply be less well known.

Pros and cons of blocking AI bots

The use of AI bots such as ChatGPT, Google-Extended and cohere-ai, among others, has grown significantly, and it is natural for site owners to wonder whether or not they should block them.

Below we explore some of the benefits and drawbacks of blocking these AI bots.

Benefits of blocking the ChatGPT bot and other AI bots

· Protection of exclusive content:

Blocking these bots helps protect your original content from being scraped and used without your permission, preserving the exclusivity and value of your work.

· Control over how information is distributed:

You have more control over how and where your content is distributed, which matters particularly for sites with sensitive or proprietary information.

· Reduced server load:

By preventing constant access from these bots, you can reduce the load on your servers, which is crucial for sites with limited resources.

· Prevention of unauthorized use:

Denying access to these bots can also prevent unauthorized or unethical uses of your content, such as building AI models on data scraped without consent.

Drawbacks of blocking the ChatGPT bot and other AI bots

· Possible impact on visibility and SEO:

Blocking certain bots, especially those tied to search engines such as Google, can hurt your site's visibility and its SEO performance.

· Limits on innovation and collaboration:

By restricting these bots, you may be closing off indirect opportunities for innovation and collaboration that they could enable by interacting with your content.

· Implementation challenges:

An effective block requires technical knowledge of how robots.txt files work and may need constant updates to stay effective.

· Possible isolation within the digital ecosystem:

Excessive blocking can leave you isolated in the digital ecosystem, with your content out of reach of technological advances and emerging opportunities.

Conclusion

Around 25.9% of the thousand most-visited websites have taken steps to block GPTBot.

Among the most notable sites to have recently added restrictions is Pinterest, alongside other major sites such as Amazon and Quora.

A significant number of large publishers and news outlets, such as The New York Times, The Guardian and CNN, have also decided to block GPTBot.

The first six major websites to lead this trend were Amazon, Quora, The New York Times, Shutterstock, Wikihow and CNN.

CCBot, on the other hand, despite being older than GPTBot, has been blocked by only 13.9% of websites since August 1, 2023.

As for attempts to block Anthropic's crawler, only two cases have been observed, Reuters being one of the sites that has restricted anthropic-ai.

It is fair to say that large companies and media outlets are increasingly concerned about how AI companies use their content as raw material.

Could this wariness shape the evolution of AI models? Are there legal grounds that could lead to lawsuits for intellectual property infringement?

Frequently asked questions

Should I block AI from accessing my content?

The short answer is that it is almost certainly not worth it. Manually blocking every AI bot is practically impossible and, even if you manage it, there is no guarantee that all of them will obey the rules in your robots.txt file. Moreover, blocking these bots can prevent you from gathering meaningful data to determine whether tools such as Bard are helping or hurting your search marketing strategy.

How can I block GPTBot and other OpenAI crawlers?

You can block GPTBot and other OpenAI crawlers by using plugins or by adjusting your site's configuration to limit their access. You can also set access restrictions through your site's robots.txt file.
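As an illustration of what a configuration-level restriction could look like, here is a minimal sketch of a WSGI middleware that refuses requests whose User-Agent header contains one of the bot names discussed in this article. The names `BLOCKED_AGENT_TOKENS`, `is_blocked_agent` and `block_ai_bots` are hypothetical. The sketch assumes your site runs on a Python WSGI application and that the bots send their documented user agent. Google-Extended is left out because it is a robots.txt token, not a request user agent.

```python
# Lowercase substrings to look for in the User-Agent header (example list).
BLOCKED_AGENT_TOKENS = ("gptbot", "chatgpt-user", "anthropic-ai", "cohere-ai")


def is_blocked_agent(user_agent: str, tokens=BLOCKED_AGENT_TOKENS) -> bool:
    """Return True when the User-Agent string contains a blocked bot name."""
    lowered = user_agent.lower()
    return any(token in lowered for token in tokens)


def block_ai_bots(app):
    """Wrap a WSGI app so that requests from blocked agents receive a 403."""
    def middleware(environ, start_response):
        if is_blocked_agent(environ.get("HTTP_USER_AGENT", "")):
            start_response("403 Forbidden",
                           [("Content-Type", "text/plain; charset=utf-8")])
            return [b"Forbidden\n"]
        # Every other visitor reaches the wrapped application unchanged.
        return app(environ, start_response)
    return middleware
```

Unlike robots.txt, a check like this is enforced by your server, but it still relies on the bot identifying itself honestly in the header.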

What can I do to protect my website from GPTBot crawling?

You can put measures in place such as paywalls, mandatory user registration, or restricted access to certain sections of your site to prevent unauthorized crawling by GPTBot.

Can GPTBot affect my website's performance?

Yes. Continuous crawling by GPTBot or other OpenAI bots can consume server resources and slow your website down, although in practice this rarely happens.
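To get a rough idea of how much traffic these bots actually generate, you could count their requests in your server's access log. The sketch below assumes a text log in which each line includes the request's User-Agent string, as in the common "combined" format. `count_ai_bot_hits`, the token list and the sample log lines are all illustrative assumptions.

```python
from collections import Counter

# Bot names to look for in each log line (example list).
AI_BOT_TOKENS = ("GPTBot", "ChatGPT-User", "anthropic-ai", "CCBot", "cohere-ai")


def count_ai_bot_hits(log_lines, tokens=AI_BOT_TOKENS) -> Counter:
    """Count log lines per bot, matching bot names case-insensitively."""
    hits = Counter()
    for line in log_lines:
        lowered = line.lower()
        for token in tokens:
            if token.lower() in lowered:
                hits[token] += 1
                break  # Attribute each request to at most one bot.
    return hits


if __name__ == "__main__":
    # Made-up sample lines; in practice, iterate over your real log file.
    sample = [
        '203.0.113.5 - - [01/Jan/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0; compatible; GPTBot/1.0"',
        '203.0.113.9 - - [01/Jan/2024:10:00:01 +0000] "GET /blog HTTP/1.1" 200 900 "-" "CCBot/2.0"',
        '198.51.100.7 - - [01/Jan/2024:10:00:02 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 Firefox/120.0"',
    ]
    for bot, count in count_ai_bot_hits(sample).items():
        print(f"{bot}: {count}")
```

If the counts are small next to your total traffic, performance alone is unlikely to be a strong reason to block these bots.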

Links and resources

- https://originality.ai/ai-bot-blocking

- https://platform.openai.com/docs/plugins/bot

- https://platform.openai.com/docs/gptbot

- https://darkvisitors.com/agents/

- https://dig.watch/updates/major-websites-block-ai-crawlers-from-scraping-their-content

- https://www.govtech.com/question-of-the-day/what-publications-have-blocked-chatgpts-web-crawler

Alvaro Peña de Luna

Co-CEO and Head of SEO at iSocialWeb, an agency specializing in SEO, SEM and CRO that manages more than 350M organic visits per year with a 100% decentralized infrastructure, and at Virality Media, a company with its own projects totaling more than 150 million active monthly visits across different sectors and industries.

Systems Engineer by training and SEO by vocation. Tireless learner, fan of AI and dreamer of prompts.