Having a deep understanding of how crawl budget works is essential for executing effective SEO strategies. This comprehensive guide will teach you what crawl budget is, how to optimize it, and ways to leverage crawl budget for improved SEO and website visibility.

Key Takeaways and Next Steps

The major points to remember are:

- Crawl budget determines how many of your pages Google can crawl per session.

- Optimize site architecture, content, and tech for efficient high-priority crawling.

- Monitor stats in Search Console to identify issues limiting your budget.

- Fix errors, streamline site structure, and highlight your best content.

- Normally, websites with fewer than 100,000 pages should not need to worry about the crawl rate limit.

Now that you have a solid understanding of crawl budget, focus on leveraging it to surface your best assets to Google. Optimize site content and tech for quality crawling, not maximum quantity. This will translate to visibility gains in the long run.

What is Crawl Budget?

Crawl budget refers to the number of URLs (web pages) that Googlebot can crawl from your website per session.

Simply put, it is the crawl capacity that is allocated to your website by Google. The search engine has to divide its finite indexing capacity among all websites – crawl budget manages that allotment.

Google wants to crawl and index as many pages as it can from your site within your crawling quota to better understand your content and serve relevant results. However, it has technical limitations that prevent it from crawling every page on the internet during each session.

Optimizing crawl budget revolves around efficiently managing Googlebot’s crawling and surfacing the most important pages to Google within the allocated capacity.

Why optimize your crawl budget for SEO

You may wonder – why should I worry about crawl budget for SEO?

Here’s why crawl budget optimization should be a priority:

- It directly impacts your pages getting indexed, which affects rankings and visibility.

- Efficient crawling improves how Google evaluates and understands your site’s content.

- It helps Google crawl new or updated content faster.

- It allows Google to recrawl important pages frequently to stay updated.

- It prevents “crawl debt” which hurts SEO rankings and traffic.

Understanding what Google can crawl from your site goes a long way in structuring an SEO strategy that surfaces your best content.

Who should be worried about crawl budget

If your website has a large number of pages, then you should be worried about crawl budget.

But, how many pages are too many?

Well, that is difficult to say, but thankfully Google gives us a fairly precise answer in its guidelines.

This can be summarized in three scenarios:

- Sites with around 1 million or more unique pages, such as ecommerce websites.

- Medium or larger sites with more than 10,000 unique pages whose content changes daily.

- Websites with a large portion of their total URLs classified by Search Console as “Discovered – currently not indexed”.

So, do not worry if you don’t fall into one of these categories.

How to Check Your Current Crawl Budget

To optimize your crawling budget, you must first understand the current crawling statistics of your website. The best way to do this is to go directly to the crawl stats report in your Google Search Console.

Follow these steps once you are in GSC:

Any different status will require your immediate attention.

2. Estimating Your Crawling Budget

Now that you know how to check crawling issues on your property, let’s see how you can calculate your crawling budget to improve SEO.

1. Determine the number of pages on your website.

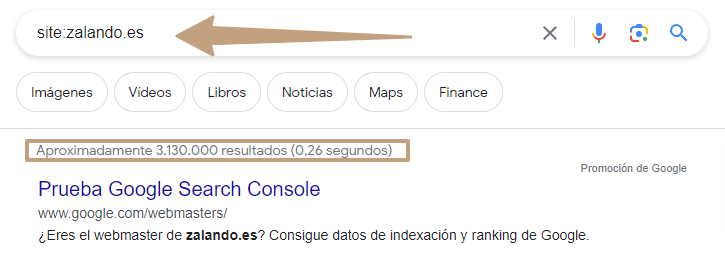

Estimate the number of indexed URLs in Google by searching for “site:yourdomain.com”, or check the number of pages in your XML sitemap, which can be a good starting point.

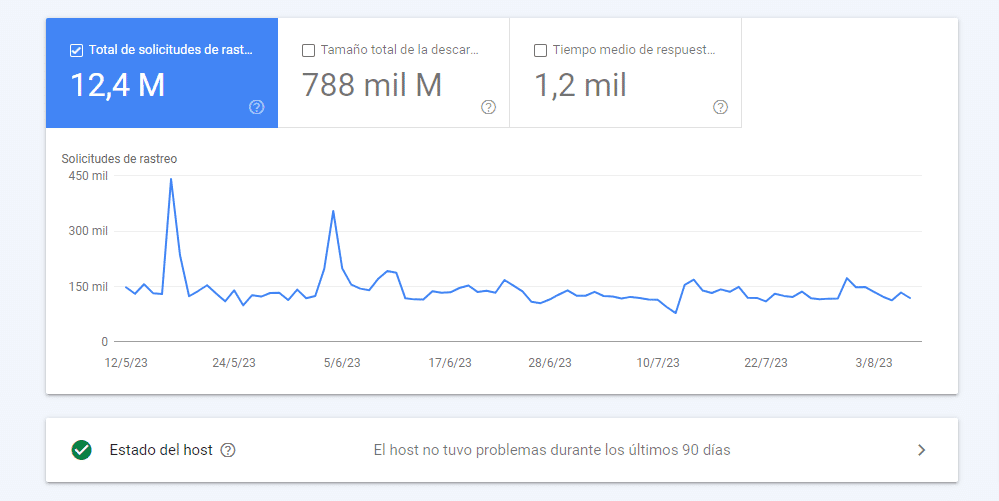

2. Within Google Search Console, click on “Settings” > “Crawl stats”. In the host section, click on your domain and you will arrive at the following page:

3. Calculate the average daily crawl requests. Divide the total crawl requests by 90 to get the average over the period.

4. Divide the number of indexed pages or your sitemap pages by the “Average Pages Crawled per Day.” The result approximates how many days Google would need to crawl every page once.

If the result is below 10, everything is likely okay. But if it is higher than 10, this could indicate a problem with your crawling budget.

If so, then proceed to perform an advanced log analysis.
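The arithmetic in the steps above can be sketched in a few lines of Python. The function names and the sample figures are hypothetical, chosen only for illustration; the 90-day period and the threshold of 10 come from the steps described in this section.

```python
def crawl_ratio(total_pages, total_crawl_requests, period_days=90):
    """Return pages divided by average daily crawl requests."""
    if period_days <= 0:
        raise ValueError("period_days must be positive")
    avg_daily_requests = total_crawl_requests / period_days
    if avg_daily_requests == 0:
        # No crawl activity at all: treat the ratio as unbounded.
        return float("inf")
    return total_pages / avg_daily_requests


def assess(ratio, threshold=10.0):
    """Apply the rule of thumb from this guide (threshold of 10)."""
    if ratio > threshold:
        return "possible crawl budget problem"
    return "likely okay"


# Hypothetical site: 50,000 pages and 180,000 crawl requests in 90 days.
example_ratio = crawl_ratio(50_000, 180_000)
print(example_ratio, assess(example_ratio))
```

Treat the result as a rough signal rather than a precise measurement, since both the page count and the crawl request total are estimates.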

7 Tips to Optimize Your Website's Crawl Budget

1. Fix crawl errors

Crawl errors like 404s and 500s use up crawl capacity. Identify and fix crawl errors to free up budget.
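One way to find these errors is to look at your server access logs. The sketch below is a minimal example that assumes logs in the common "combined" format and identifies Googlebot only by its user-agent string, which can be spoofed; the function name and sample log lines are hypothetical.

```python
import re
from collections import Counter

# Matches the request, status code and user agent of a combined-format line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_errors(lines):
    """Count (status, path) pairs for 4xx/5xx responses served to Googlebot."""
    errors = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match is None:
            continue  # skip lines that are not in the assumed format
        if "Googlebot" not in match["agent"]:
            continue  # only look at requests claiming to be Googlebot
        status = int(match["status"])
        if status >= 400:
            errors[(status, match["path"])] += 1
    return errors


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:14:02:11 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:14:05:40 +0000] "GET /home HTTP/1.1" 200 2326 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2023:14:06:02 +0000] "GET /api HTTP/1.1" 500 120 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
]

for (status, path), count in googlebot_errors(SAMPLE_LOG).most_common():
    print(status, path, count)
```

URLs that appear often in the output are the ones consuming the most crawl capacity on errors, so they are good candidates to fix or redirect first.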

2. Update site architecture

Well-organized information architecture helps Google crawl efficiently. Ensure proper use of tags like H1, title, alt text.

3. Improve internal linking

Links help Google discover new pages and understand structure. Link intelligently between related content.

4. Prune unnecessary pages

Remove low-quality, redundant and thin pages to reallocate crawling capacity to core pages.

5. Disallow non-critical pages

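Use robots.txt to block unimportant pages from being crawled and save budget. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it, so pages that must stay out of the index need a noindex directive instead (which requires the page to remain crawlable).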
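Before deploying new rules, it can help to check which URLs they would block. The sketch below uses Python's standard `urllib.robotparser` module; the rules, paths and the `is_crawlable` helper are hypothetical examples. Note that this parser does simple prefix matching and may not reproduce every detail of how Googlebot interprets robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking cart and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /internal-search/
"""


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/products/shoes",
    "https://example.com/cart/checkout",
):
    print(url, is_crawlable(ROBOTS_TXT, url))
```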

6. Leverage sitemaps

Sitemaps guide Google to your best pages. Prioritize pages in sitemap by importance.
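As an illustration, a sitemap limited to your most important pages could be generated as follows. This is a minimal sketch using Python's standard library; the URLs, dates and the `build_sitemap` helper are hypothetical, and the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical list of high-priority pages, most important first.
IMPORTANT_PAGES = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/best-selling-products", "2024-01-10"),
]

print(build_sitemap(IMPORTANT_PAGES))
```

Listing only canonical, indexable URLs keeps the sitemap focused on the pages you actually want crawled.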

7. Reduce media embedding

Non-critical media content uses crawl capacity without improving SEO. Reduce where possible.

Tips for Getting Google to Crawl Your Important Pages

You want Google to allocate more of its crawl capacity to your site’s high-priority pages. Here are some ways to facilitate that:

- Place key pages higher in site architecture – Google crawls websites in a hierarchical approach, so critical pages should be easily reachable.

- Increase internal links to key pages – More internal links signal importance of a page.

- Shorten content on key pages – Google can crawl shorter pages faster. Be concise.

- Reduce ads/media on key pages – Such non-critical content takes time to crawl.

- Submit XML sitemaps – Sitemaps help Google discover and prioritize pages.

- Produce less content – If your site generates tons of content, Google can’t keep up. Focus on quality over quantity.

- Reduce architectural depth – Flatter site structure with fewer click depths helps Google crawl pages faster.
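Click depth can be measured with a breadth-first search over your internal link graph. The sketch below assumes you already have a mapping from each page to the pages it links to (for example, exported from a site crawler); the graph and the `click_depths` function are hypothetical.

```python
from collections import deque


def click_depths(links, start="/"):
    """Return the minimum number of clicks from start to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
SITE_LINKS = {
    "/": ["/category", "/about"],
    "/category": ["/product"],
    "/product": ["/"],
}

for page, depth in sorted(click_depths(SITE_LINKS).items(), key=lambda item: item[1]):
    print(depth, page)
```

Key pages that turn up at a high depth, or that do not appear in the result at all because nothing links to them, are candidates for additional internal links.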

Common Crawl Budget Myths

There are some common misconceptions about how crawl budget works:

Myth: Google will crawl all pages on my site

Google does not have unlimited resources to crawl every URL from every site during every session. Crawl budget ensures efficient broad crawling.

Myth: More pages crawled equals better SEO

Crawling low-quality or irrelevant pages wastes crawl budget. It’s better to have Google crawl your core pages more frequently.

Myth: Google crawl stats define search rankings

Crawl stats describe how much of your site Google crawls, not how your pages rank. Crawling is necessary for a page to be indexed, but a higher crawl rate does not by itself lead to better positions in search results.

Myth: Google only cares about new content

Google wants to keep existing pages up to date too. New content does not automatically get crawl priority over old.

The key takeaway is that crawl budget reflects general indexing capability, not specific rankings. Optimize for quality crawling over maximum crawling.

Common Reasons for Sudden Crawl Budget Changes

Some common reasons Google may suddenly increase or decrease your site’s crawl budget:

- Major changes in your site’s content volume.

- Significant growth or decline in popularity and user engagement signals.

- Large fluctuations in indexing or crawl issues.

- Manual webmaster requests for more or less crawling.

- Major algorithm updates around indexing and ranking priorities.

- Seasonal traffic and query volume changes, impacting demand.

Understand that Google is constantly optimizing its crawl allocation across all sites. Don’t panic if you see budget shifts occasionally, just continue good SEO practices.

Conclusion about crawl budget optimization

In conclusion, web crawl optimization is crucial for ensuring that search engines allocate their resources effectively and crawl the most important pages on a website.

By implementing strategies such as:

- improving site speed,

- optimizing XML sitemaps,

- and managing crawl demand,

website owners can increase the crawlability of their site and make it easier for search engines to discover and index their content.

Additionally, by monitoring and analyzing crawling data it is possible to identify any potential issues or inefficiencies in crawling.

It is important to note that crawl allocation is not a static value and can fluctuate depending on various factors, such as the size and popularity of the website.

Therefore, it is crucial for website owners to regularly review and optimize their crawling resources to ensure that search engines are efficiently crawling their website and indexing their content.

Overall, crawl budget optimization should be an ongoing process that is integrated into a website’s overall SEO strategy.

FAQ

What is crawl budget optimization?

Crawl budget optimization is a process that involves managing the resources search engines allocate to crawl and index your website. It aims to maximize the efficiency and effectiveness of the crawling process by ensuring that the search engine’s crawler (such as Googlebot) spends its allocated resources on valuable pages.

Why is crawl budget optimization important for SEO success?

Crawl budget optimization is important for SEO success because it helps search engines discover and index your website’s content more efficiently. By optimizing your crawl budget, you increase the likelihood of your important pages being crawled, indexed, and ranked by search engines, potentially leading to higher visibility and organic traffic.

How can I increase my crawl budget?

You can improve your crawl budget by optimizing your website’s technical SEO, reducing crawl waste, managing crawl limits, and ensuring that your site structure allows for efficient crawling.

What is Google's crawl budget?

Google’s crawl budget refers to the number of requests Googlebot makes and the number of pages it can crawl on your site within a given time frame. It is an essential resource that search engines allocate to each website, and optimizing this budget can improve the crawling and indexing of your website.

Do I need to worry about crawl budget optimization?

It is recommended to consider crawl budget optimization as part of your SEO strategy, especially if you have a large website with many pages.

How can I improve my crawl budget for my website?

To improve your crawl budget for your website, you can start by reviewing your website’s crawl data in Google Search Console or other SEO tools. By analyzing this data, you can identify any crawl issues or patterns. Additionally, optimizing your website’s performance, managing robots.txt, and using structured data can also help improve your crawl budget.

What should I do if Google isn't crawling my site?

If Google isn’t crawling your site or you notice a decrease in crawl activity, there are a few steps you can take. First, check for any crawl errors or issues reported in Google Search Console. Ensure that your website’s robots.txt file is properly configured and not blocking important pages from being crawled. If the issue persists, you may need to consult with a technical SEO professional for further assistance.

Alvaro Peña de Luna

Co-CEO and Head of SEO at iSocialWeb, an agency specializing in SEO, SEM and CRO that manages more than 350M organic visits per year and with a 100% decentralized infrastructure.

In addition to the company Virality Media, a company with its own projects with more than 150 million active monthly visits spread across different sectors and industries.

Systems Engineer by training and SEO by vocation. Tireless learner, fan of AI and dreamer of prompts.