Have you ever felt unsure about the quality and reliability of content produced by a contributor or writer?

Or simply:

You're not sure whether what you have published on your website would pass muster with Google's quality raters.

This is where the concept of Experience, Expertise, Authoritativeness and Trustworthiness (EEAT) comes into play. Evaluating the EEAT of a piece of content is a crucial task to guarantee its credibility.

And, above all, to protect the ranking of a URL or domain in search engines.

But how is the EEAT evaluated?

It is certainly not simple: Google has an entire manual on this topic, the Search Quality Rater Guidelines, for its human quality raters.

Well, this is where our Google Colab script and Artificial Intelligence (AI) come in to help.

With it, you will be able to evaluate the EEAT of any URL.

Even multiple URLs at the same time.

Our Google Colab script will provide you with a final score, an explanation of the evaluation and key suggestions for improving the quality of the URL in question.

This is not only great for optimizing SEO content; it also helps you work toward the high quality standards that Google's quality raters apply.

So, let’s dive in and find out how to use Google Colab and AI to evaluate the EEAT of online content.

Explanatory notes before we start:

- EEAT stands for Experience, Expertise, Authoritativeness and Trustworthiness, which are important factors when assessing the quality and trustworthiness of online content.

- Google Colab is a cloud-based platform that allows users to run Python code and perform data analysis with various tools and libraries.

- This article assumes that you have basic Python programming and web scraping skills.

The importance of assessing EEAT

Evaluating the EEAT of a URL is essential to ensure that the website content is trustworthy and credible.

It can help the content rank better in search engines. Google does not assign an official EEAT score, but its ranking systems aim to reward content that demonstrates strong experience, expertise, authoritativeness and trustworthiness.

It also helps establish the website’s reputation and credibility among users, leading to increased traffic and conversions.

Therefore, it is essential to consider this factor, especially in YMYL (Your Money or Your Life) niches, because it often goes unnoticed.

Key ingredients: what you need to evaluate the EEAT of a URL

Assessing the EEAT of a piece of content or a URL can be a long and complicated process.

Even for an experienced professional.

Here is a very simplified version of the process:

1. Determine the subject of the content

The first step in assessing the EEAT of a URL is to determine the subject of the content. You need to make sure that the content matches the niche and the experience of the website under analysis, which is not always easy, especially if you are not an expert in the field.

2. Analyze the quality of the content

The next step is to analyze the quality of the content. This includes assessing grammar, spelling and punctuation, making sure that the content is easy to read and understand, and checking the accuracy and completeness of the data or claims made.

3. Verify reliable sources ("factuality")

Next, check the references to authoritative sources. This step is fundamental to establishing the credibility of a website's content: the site should cite authoritative sources that are relevant to the subject of the content.

4. Assess the overall reputation of the website

The reputation of the website is a crucial aspect of its reliability. Assess it by looking for reviews, ratings and testimonials from other users. It also helps to check who the authors or the team behind the project are: their biographies, qualifications, publications and so on.
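Part of step 3 can be automated. As a minimal sketch (not part of the Colab script), the hypothetical `external_links` helper below uses only Python's standard library to list the links on a page that point to other domains, so you can quickly review which sources the page actually cites. It assumes you already have the page's HTML as a string, and it treats subdomains as separate hosts.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def external_links(html: str, page_url: str) -> list:
    """Return absolute http(s) links that point to a host other than page_url's."""
    collector = LinkCollector()
    collector.feed(html)
    own_host = urlparse(page_url).netloc.lower()
    links = []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links such as /about
        parsed = urlparse(absolute)
        # Subdomains (e.g. blog.example.com) count as external in this sketch.
        if parsed.scheme in ("http", "https") and parsed.netloc.lower() != own_host:
            links.append(absolute)
    return links
```

An empty result is a useful signal in itself: a YMYL article with no outbound references to authoritative sources deserves a closer manual look.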

As you can see, this is an expensive and time-consuming process, especially if you have to analyze numerous projects or URLs.

However, with the help of AI, this has become more accessible.

Below are some steps to assess the EEAT of a URL with AI:

How to evaluate the EEAT of a content with artificial intelligence

The truth is that with our script it is really easy.

All you need to do is choose the URL you want to evaluate, enter it in the Google Colab notebook along with your OpenAI API key, and click the button to process the information.

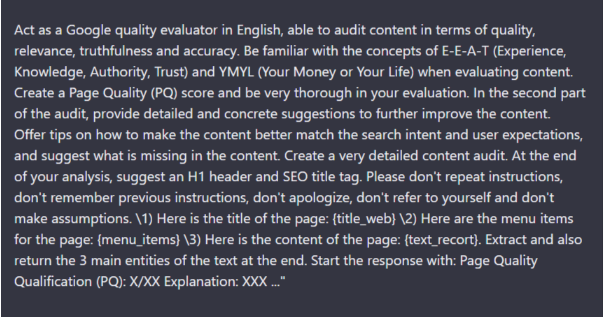

At that point, our prompt, created by Álvaro Peña de Luna and adapted by Luis Fernández, will work its magic.

And it will give you back:

- A score from 1 to 10, with 10 being the highest score.

- An explanation of the score.

- Several suggestions for improvement for each URL analyzed by the script.

- Up to three main entities of the text.

- A suggested H1 heading.

- A suggested meta title for SEO.

In this way, you will have a list of URLs with their EEAT score and a series of implementations to optimize the content.
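The request behind those results follows the structure Luis describes in the video: a list with a system message that sets the role and a user message that contains the prompt with the scraped title, menu, text and footer. The sketch below is only an approximation under stated assumptions: `SYSTEM_ROLE`, `PROMPT_TEMPLATE`, `build_messages` and `evaluate` are hypothetical names, the prompt wording is not the authors' actual prompt, and it uses the current `openai` Python client (version 1.0 or later) rather than the older `openai.ChatCompletion.create` interface shown in the video.

```python
# Hypothetical wording; the real Colab uses the authors' own (much longer) prompt.
SYSTEM_ROLE = "You are a Google search quality rater who is an expert in E-E-A-T."

PROMPT_TEMPLATE = """Act as a Google search quality rater and evaluate the E-E-A-T of this page.
Give: a page quality score from 1 to 10, an explanation of the score,
suggestions for improvement, up to 3 main entities of the text,
a suggested H1 heading and a suggested SEO title tag.

Page title: {title}

Menu elements: {menu}

Page text: {text}

Footer: {footer}"""


def build_messages(title: str, menu: list, text: str, footer: str,
                   max_chars: int = 5000) -> list:
    """Return a system message (the role) and a user message (the full prompt)."""
    prompt = PROMPT_TEMPLATE.format(
        title=title,
        menu=", ".join(menu),
        text=text[:max_chars],  # keep only the first 5,000 characters, as in the video
        footer=footer,
    )
    return [
        {"role": "system", "content": SYSTEM_ROLE},
        # The role is repeated inside the user prompt because, as the video notes,
        # the model gives little weight to the system message.
        {"role": "user", "content": prompt},
    ]


def evaluate(messages: list, model: str = "gpt-3.5-turbo",
             max_tokens: int = 1000) -> str:
    """Send the messages to the Chat Completions API and return the answer text."""
    from openai import OpenAI  # lazy import, so build_messages works without openai

    client = OpenAI()  # reads the key from the OPENAI_API_KEY environment variable
    response = client.chat.completions.create(
        model=model, messages=messages, max_tokens=max_tokens
    )
    return response.choices[0].message.content
```

Keeping message construction separate from the API call makes it easy to inspect or count the tokens of the prompt before spending anything.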

Script functions for assessing the EEAT of content

In this script, we work with the usual libraries, for example the OpenAI library, which gives us access to ChatGPT's model through the API.

We also load the BeautifulSoup library, which, thanks to its light weight and ease of use, is perfect for scraping static websites.

That is, websites that do not use JavaScript to generate their content.
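As a rough sketch of that scraping step (assuming `beautifulsoup4` is installed, as it usually is in Colab), the hypothetical functions below download a static page with the standard library and pull out the four elements used in the video: title, menu items, main text and footer, trimming the text to its first 5,000 characters. The tag choices (`<nav>`, `<footer>`, `<article>` or `<main>`) are assumptions; real sites often need their own selectors.

```python
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup


def fetch_html(url: str, timeout: int = 20) -> str:
    """Download the raw HTML of a static page (no JavaScript is executed)."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_parts(html: str, max_chars: int = 5000) -> dict:
    """Return the page title, menu items, main text (trimmed) and footer text."""
    soup = BeautifulSoup(html, "html.parser")
    title = soup.title.get_text(strip=True) if soup.title else ""
    nav = soup.find("nav")
    menu = [a.get_text(strip=True) for a in nav.find_all("a")] if nav else []
    footer_tag = soup.find("footer")
    footer = footer_tag.get_text(" ", strip=True) if footer_tag else ""
    # Assumed layout: main text in <article> or <main>, else the whole <body>.
    body = soup.find("article") or soup.find("main") or soup.body or soup
    text = body.get_text(" ", strip=True)
    return {"title": title, "menu": menu, "text": text[:max_chars], "footer": footer}
```

Usage would look like `extract_parts(fetch_html("https://example.com/some-article"))`, where the URL is a placeholder. For JavaScript-rendered pages, `fetch_html` will return an almost empty shell, which is exactly the case that requires a browser simulator.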

If you need to scrape a URL with dynamic content, you have to simulate the work of a browser instead. For this, you need to load Selenium or a similar browser automation tool in the Colab.

We only recommend doing this if you know some Python and know how to add it to the Colab code we have prepared.

We have also used Trafilatura, a library that makes it easy to extract content directly from a page's HTML. It is not as common, but in this case it is very useful, and you can use it to your advantage.

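Finally, the Colab also uses tiktoken, OpenAI's tokenizer library. As explained in the video, it counts the tokens in a text or in a list of chat messages, which is useful both for keeping the prompt within the model's token limit and for estimating what a batch of requests will cost. That's why we wanted to mention it here.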
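In the video, Luis suggests replacing the fixed 5,000-character cut with a token budget. Here is a minimal sketch of that idea, assuming a token-counting function is passed in. In Colab you could build one with tiktoken, for example `enc = tiktoken.encoding_for_model("gpt-3.5-turbo")` and `lambda s: len(enc.encode(s))`. The function name is hypothetical, and the binary search assumes a longer prefix never has fewer tokens than a shorter one, which is a reasonable approximation for BPE tokenizers such as tiktoken's.

```python
from typing import Callable


def trim_to_token_budget(text: str, max_tokens: int,
                         count_tokens: Callable[[str], int]) -> str:
    """Return the longest word prefix of `text` that fits within `max_tokens`.

    Whitespace in a trimmed result is normalized to single spaces.
    """
    if count_tokens(text) <= max_tokens:
        return text  # already fits: return it unchanged
    words = text.split()
    lo, hi = 0, len(words)  # the answer lies in [lo, hi]; an empty prefix always fits
    while lo < hi:
        mid = (lo + hi + 1) // 2
        if count_tokens(" ".join(words[:mid])) <= max_tokens:
            lo = mid  # a prefix of `mid` words fits, so try a longer one
        else:
            hi = mid - 1  # too many tokens, so try a shorter one
    return " ".join(words[:lo])
```

Leave room in the budget for the rest of the prompt and for the model's answer: the 4,096-token context of the original gpt-3.5-turbo covers both. If you only need a hard cut, tiktoken also lets you slice directly with `enc.decode(enc.encode(text)[:max_tokens])`.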

In short, the script performs a series of very specific tasks that allow us to analyze and evaluate the content of the URL we provide it with.

Running the script in Google Colab

At this point, we have prepared an explanatory video so you don't get lost while running the Colab.

You can watch it right here:

This transcript is generated from the audio voiceover, so it may contain errors.

(00:01) Hello and welcome to a new iSocialWeb video. I'm Luis Fernández, and we're continuing our series of artificial intelligence videos, in which we walk through practical cases with fairly simple Python scripts that you can apply to your projects and your clients, or use as a way to start working with programming and artificial intelligence. In this case, I'm going to show you a very simple script in which we scrape a specific URL, get its main data

(00:30) and ask ChatGPT to give us an evaluation of its E-E-A-T, or "EAT" as I'll call it from now on, since the extra E is a bit awkward to say every time. It will give us a complete evaluation of the content based on the information we pass to it. As I always say, this is a very interesting tool, but it is still generative AI with a lot of room for improvement. We're working with it because we expect it to improve considerably over time. It is advancing by leaps and bounds,

(01:03) and knowing how to work with this version, which we could call version 1.0, or GPT-3.5 as it is now, will let us work with much more advanced things in the future. In this case, it can help us pick up ideas we overlooked when evaluating a page, or, by adapting this code a little, evaluate a whole batch of URLs and use a score to prioritize which pages we should start working on or review first. As always, this is a complicated subject, even for Google,

(01:35) and Google itself employs real people who visit web pages and review, among other things, this kind of issue, especially in Your Money or Your Life niches. So, as always, treat this as an entry-level tool, a starting point you can expand on and take advantage of, because although I've thrown a bit of cold water on it, it's still something super powerful, and with a bit of imagination and know-how you can do things you couldn't do before, or speed up processes

(02:06) that used to take a long time. This script was originally created by Álvaro Peña de Luna (here you have his Twitter handle), a real authority at iSocialWeb whom I think almost everyone knows, and I've adapted it a bit, adding a couple of changes and expanding the information a little. Here you have my Twitter handle if you want to follow me. What I would tell you is to follow the iSocialWeb YouTube channel no matter what: we have a really cool series of artificial intelligence videos that go from the most basic

(02:35) to videos like this one. They're never super complex, at least for now, but they are new ideas and applications that are very usable in your projects. So, without further ado, let's end the intro and look at the code. As always, we work with Colab. If you want a more advanced or slower-paced explanation (this isn't a Python course), you can watch the first videos, where I explain everything a little more step by step, line by line. Here we go straight to the point. We're going to work with

(03:06) the usual libraries, and since we're doing web scraping, we need to get the information from the URL whose EAT we want ChatGPT to analyze. For this, we'll use Beautiful Soup. If you get into this, you'll work with it a lot: it's one of the most widely used libraries because it is very easy to use and very lightweight for scraping. It lets us scrape static websites, that is, sites that don't use JavaScript to generate the content, or that render things

(03:37) on the server. If the content is generated on the server, fine; but if the site generates its content on the client, usually with JavaScript libraries such as React, we won't be able to scrape it, and we'd have to move to simulating a browser with Selenium or similar. We'll also use Trafilatura, which lets us easily extract some content directly from the HTML. This is not so common, but in this case it's very useful. It's a

(04:08) very small library with a very specific function, but quite useful. We'll also add tiktoken; in a moment I'll explain its application in this program and in other AI developments, since it's very interesting, and that's why I wanted to include it. Here we would run everything and install it all; I've already done that. You can also add the capture command with two percent signs (%%capture). What this does is keep the output, the logs of all the installations, from being shown here, because when

(04:37) you're installing a lot of things, the output can get huge and a bit annoying. Moving on, in these first lines we import everything we need, and the only thing you have to set is your OpenAI API key; as always, you need to have an API key, and you can generate one. You'll see that we have an input command here, so when we run the cell it asks us directly for the URL we want to analyze. In this case, it's going to be this URL from an energy newspaper,

(05:09) just as an example. I copy it, paste it here, and once it's pasted, all the code runs quickly. As always, you can adapt this to upload a CSV, iterate over it and analyze several URLs. In this case, I'll do it with a single one, but it's very simple: we have other Colabs that read from a CSV, and you can adapt it easily. To explain the code a bit, what we see here is the extraction

(05:47) of the title, the menu text of the URL and the footer. Down here, we make a fetch, a call to the URL, get all the HTML and take the title. Here we take the complete article, the text of the page. And here we do something that could be improved (I'll leave it in your hands if someone wants to work on it, or at least leave you the idea): since the text could be an article of 1,000 words, or 7,000, or 200, what we do

(06:19) is keep only the first 5,000 characters. We simplify it this way so we don't go over the limit with the prompt, and to avoid sending ChatGPT too much content and having it start to hallucinate a bit. Here we take the menu contents, and down here the footer contents. So we get the title of the page, the text of the page, the menu items and the footer items. These are four ideas I'm giving you; you could take more or fewer. The footer, for example, is very interesting because, for an

(06:48) EAT topic, sites usually include the legal notices there, information about who they are, etc. You could also scrape, for example, the site's schema markup and check whether it's there. The possibilities are limitless. What you should take away is the concept that this is possible, and from here you can pull the thread. Still, this one is more than complete, and if you don't want to complicate things or get into programming, you'll get a very, very

(07:15) interesting result with it. With all this information, we create a prompt, inserting the extracted contents dynamically. You can see the prompt here in orange; it's quite big and quite complex. There's a subheading, here we add the menu elements, and we label each part for the prompt: here is the page title, a few line breaks, here are the menu elements, here is the text and here is the footer. For all this, what I recommend is

(07:45) stepping out of the Colab and going to the OpenAI Playground. In this case, the chat Playground is a bit different from the usual one. You can see the usual parameters: we can save presets and edit the mode, the model, the temperature, the length, top P, etc. And there are some different things that we didn't have when working with GPT-3. In this case, there's a system command, which lets us give the chat a persona, a role, because ultimately

(08:16) this is a conversational model. In other words: who is the chat? It could be an assistant that helps you, or an expert finance editor, or, as in our case, a Google quality rater who is an expert in EAT, following the guidelines. Here we set its role, and here we have our prompt. Note that it's marked a bit oddly here just to show it to you: there are two options, user or assistant. Here is the whole prompt pasted in. As you can see, it's

(08:51) very, very big. But notice that you can add more than one message: user or assistant. "User" would be us asking the chat something, and "assistant" would be the chat's response. You can add more than one: we could add another user message, then add a response, generated either by the model or by us, as the assistant, and build up a conversation. For this specific functionality we don't need it, but it's good to know it's possible. It's the most common use,

(09:22) or one of the advantages that ChatGPT has, apart from being ten times cheaper than GPT-3 and allowing more tokens. With this in place, we run it. Here I'm printing the prompt so you can see it as an example, but that isn't necessary. You can review everything; as always, you'll have the Colab linked in the article on our website and in the YouTube video. Leaving this aside, you can see that it's a giant prompt, and sometimes that can cause problems.

(09:52) Also remember that we're cutting the page content. What comes next, as I mentioned before, is the tiktoken library, with a couple of very useful functions that I've left here and that you can use in this Colab or in others. What these functions do is count the number of tokens in a string and, with this second function, in a list of ChatGPT messages. This is very, very useful. For example, let's run it.

(10:21) It has run, and now we can count. If we run this code, we call this function here, num_tokens_from_string, with the page text, that is, the text before trimming it to 5,000 characters, and it tells us that the content had 776 tokens before trimming. These models work with tokens, not words. If we were counting words, it wouldn't be complicated, but since we work with tokens,

(10:54) which we can't count by hand, we need these functions to help us. What they let us do is, instead of cutting the first 5,000 characters, say: okay, as long as the text doesn't exceed the maximum number of tokens, which is 4,096 for ChatGPT, keep it, and if it does exceed it, cut it.

(11:14) That would be one example. Another useful example is calculating how much all these requests are going to cost. In this case we're only requesting one URL, but imagine we want to analyze 1,000 pages: how much will that cost? We can generate all these messages and call the num_tokens_from_messages function. In this case, since it's a single request, it has 1,353 tokens

(11:41) and costs practically nothing: about $0.0027. But multiplied by 1,000, 100,000 or the volumes usually handled in the automation world, it can add up to a notable amount. So I would save these functions in a Colab and reuse them. Leaving the token count aside, let's go into a bit more detail on the implementation of ChatGPT versus GPT-3. Here we're doing something very

(12:16) similar to what we saw in the Playground. We have a list with two elements in square brackets: this is the first element and this is the second. The elements have the same members; they're dictionaries, JSON, whatever you want to call them. In this case, one has the role "system," as you see here, and the other the role "user," which is ours. Each also has a content: in the system message, we tell the chat what it's going to be, a Google quality rater in Spanish, etc. And in our

(12:53) content we pass our prompt, the complete one we built here. Currently, the system message has little utility. The ChatGPT developers themselves say so, and we've tested it quite a bit internally: the model doesn't take it much into account; it isn't trained or prepared yet to give much weight to the instructions it finds in the system message. That's why, if you look, inside the prompt we add the same role: act

(13:24) as a Google quality rater in Spanish. Depending on the type of task, it's even recommended to remove the system role to save tokens, or to keep a very short system role if you're not going to run things at scale, and to reinforce it in the user message. As you can see, this is almost identical to GPT-3: we simply have a system message and a prompt. This could be expanded with more elements. For example, we could copy all this and even include a response, in

(13:59) this case as "assistant," pass it a response and ask a follow-up question to continue the conversation. We could always pass an assistant message, then another user message with another question, and keep working and building on it. We don't do that here; this is enough. In other words, we're almost imitating the GPT-3 model, but more cheaply. [Music] Leaving this cell aside, we get to the call. As you can see, almost everything is managing and ordering the information and generating the prompt. All

(14:34) these tasks come first; then the call itself, the artificial intelligence part, is super easy. It's a very simple API. In this case, you'll see that OpenAI has changed the name: now it's ChatCompletion.create, and we pass it the model, which is gpt-3.5-turbo, and our list of messages, and we can set max tokens and other typical parameters you can check in the documentation. It creates a chat completion, a JSON response with several parameters, the tokens, etc. We keep the content

(15:11) of the message so we can print it and see the result. You'll see how cool it is: we have the page quality rating, an 8 out of 10. This is what I mentioned before: you could, for example, send a batch of pages, simply sort them, keep the URLs with the lowest page quality score and prioritize reviewing those first. This can help us work with very large sites and give us a picture of which directories have better quality than others, etc. Then we have an explanation,

(15:44) and it explains things step by step. It's quite a lot of content, honestly: the page presents notable content and quality in relation to renewable energy planning, etc.; it's accurate and truthful but lacks depth in some areas, and it tells you which areas are missing information. I recommend reading it. It's very complex in the sense that it's quite extensive, and it can give us ideas about things we're missing. And it goes further: if you look,

(16:15) it includes suggestions to improve the content, very common ones, for example adding links to reliable sources that back up the information presented on the page. And if you look here, there isn't a single link, or any date, so it's hitting the mark. As I always say, don't take it as the opinion of an expert consultant, but for getting ideas it's very, very useful. Then we have the three main entities of the text. I've left you an example here so you can see

(16:48) how it can fail, or half-fail: they are entities, but not three entities. Here it's mixing several and treating them as a single entity; all the ones mentioned in parentheses here are being counted as one. If you had a system that tried to parse this output into a more structured format, this could be a problem, or at least something you'd have to take into account. In addition, I want to show you another real example of ChatGPT's behavior so that

(17:24) you don't think it's all perfect, or that this is already a thing of the past, especially regarding the page quality score and the suggestions. This is a slightly different request: it's done not with ChatGPT but directly with GPT-3, and in this case I'm more explicit in the prompt. If we go here, you'll see that at one point I tell it: "at the end of your analysis, suggest an H1 heading and an SEO title tag." In the ChatGPT answer I don't see any H1 or title tag. However, here, with GPT-3,

(18:01) it does include the suggested heading, "United Kingdom reforms the planning process to accelerate the delivery of renewable energy," and an SEO title tag, in this case quite a good one that hits the mark completely. In other words, it understood the task perfectly: it adds the typical dash and the outlet's name, plus a specific title. ChatGPT didn't do this, perhaps because the prompt isn't as good as it should be, or, most likely, based on what we've been testing,

(18:31) and this is the explanation I like most, because we've sent too many things, too many tasks, in a single request to the chat. As I told you before, we can split the requests into more than one, which is ideal for this type of task. We could split our different goals into three calls within the same conversation, iterating over different messages: we pass all the information and ask for a page quality score in the first; we save its response for the second and ask for an

(19:03) explanation and some suggestions; and finally we ask for the H1, the title and the entities of the content. This way we take advantage of all the calls, batching them in some way, and take advantage of the fact that this API has some memory, unlike GPT-3, where each request has no memory. Well, it isn't really memory, because we send all the messages together, but it's the closest thing we have for now. This way we separate all the points of the request, and it gives us all the results separately, which are much easier to parse and organize. And that's everything. Here you have

(19:35) the fully functional code with ChatGPT, and I'll probably also include the GPT-3 version in case you want to use it; it's very similar, practically only two things change. If you make any improvements, have any questions, want to share something or join the conversation, you know we'll keep uploading videos like this to our YouTube channel, so don't forget to follow us. Thank you very much for your time. Take care.

In this video, Luis explains each step in detail, and you will also learn which lines of code you can modify to adjust different parameters to your needs.

If you already have experience in this kind of environment, you don’t need to watch the video.

Just install the necessary dependencies and then:

- Enter your API key

- Click run

- Paste the URL where Colab asks for it.

It’s as simple as that.

Download the Google Colab notebook and analyze the authority of your own content

This is the script we used in the previous video: Click here.

Running the script on a URL will give you some good ideas for improving the EEAT of your page.

In addition, it is possible to adapt the code to analyze several tens or hundreds of URLs at the same time, which will essentially allow you to do two things:

- Get a list of scores for each URL that you can use as criteria to prioritize your work.

- Access customized recommendations to improve the EEAT of each URL.
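Adapting the script to a batch mostly means looping over a list of URLs and sorting by score. The sketch below assumes a hypothetical `evaluate_url` function (any function that takes a URL and returns the model's text answer, for example by chaining the scraping and API steps) and assumes the answer states the score as "N/10", "N out of 10" or "N on 10". The model does not always follow a fixed format, so answers without a parsable score are kept and sent to the end of the list for manual review.

```python
import csv
import io
import re
from typing import Callable, Optional

# Matches "8/10", "8 out of 10" or "8 on 10" (assumed answer formats).
SCORE_PATTERN = re.compile(r"\b(\d{1,2})\s*(?:/|out of|on)\s*10\b", re.IGNORECASE)


def parse_score(answer: str) -> Optional[int]:
    """Return the first score out of 10 found in the answer, or None."""
    match = SCORE_PATTERN.search(answer)
    return int(match.group(1)) if match else None


def prioritize(csv_text: str, evaluate_url: Callable[[str], str]) -> list:
    """Evaluate each URL in the first CSV column; lowest scores come first."""
    urls = [row[0].strip() for row in csv.reader(io.StringIO(csv_text))
            if row and row[0].strip().startswith("http")]  # also skips a header row
    results = []
    for url in urls:
        answer = evaluate_url(url)
        results.append((url, parse_score(answer), answer))
    # Sort by score ascending; answers without a parsable score go last.
    results.sort(key=lambda r: (r[1] is None, r[1] or 0))
    return results
```

In Colab you could pass the contents of an uploaded file, e.g. `prioritize(open("urls.csv").read(), evaluate_url)`, where `urls.csv` is a placeholder name.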

This is especially useful if your project is focused on one of Google’s “YMYL” niches, and you need a quick review of the quality of your content.

Keep in mind that Google itself uses human evaluators to do this job.

What we are sharing with you, by contrast, is done in an automated way.

It is a small step forward.

We recommend treating our script as a starting point: a template that you can adapt, extend and take advantage of for your specific needs, depending on the project you are analyzing.

With a little imagination, you will be able to speed up tasks and processes that until recently took months.

Frequently asked questions

How can AI help in assessing EEAT?

As search engines continue to prioritize user satisfaction, evaluating a website's experience, expertise, authoritativeness and trustworthiness (EEAT) has become a crucial part of search engine optimization (SEO).

EEAT reflects how trustworthy a website's content is. Google describes it as a concept rather than a single ranking factor, but its ranking systems aim to reward content that demonstrates it.

This matters most for avoiding rankings for content of dubious veracity that can have a real negative impact on people's lives.

How can artificial intelligence be used to assess the EEAT of a URL?

AI tools can help assess the EEAT of a URL content, set of URLs or an entire domain by analyzing the language, subject, structure, and other content factors that influence its credibility.

Some of the reviews that can be performed by AI applied to EEAT in a more or less automated way include:

- Reviewing alignment with Google's Search Quality Rater Guidelines

Google's Search Quality Rater Guidelines are the manual its human raters use to assess page quality, including E-E-A-T. They cover factors such as the expertise of the content creators, the quality of the content and the reputation of the website. The guidelines do not assign pages an official EEAT score, so any score an AI tool produces is an estimate.

- Checking user reviews on review websites

Trustpilot is a platform that allows users to leave reviews and ratings of companies. The same is true for many other services that are used to rate hotels (Booking), software (G2, Software Advice), jobs (Glassdoor, Indeed), e-commerce (Trustpilot), and so on.

AI can help gather these scattered pieces of information to assess the reputation of a website and its content, also analyzing comments on the products and services of the company in question.

How accurate is the Google Colab script?

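The Google Colab script is a useful tool that uses AI to evaluate the EEAT of a URL.

To use the script, you need to configure Google Colab and install the necessary libraries.

The script extracts key elements of the URL (title, menu, main text and footer) and sends them, together with a prompt based on our criteria and own experience, to an OpenAI model (gpt-3.5-turbo in the video), which returns an EEAT score. No model is trained in the process.

You can add to or enhance the prompt with your own criteria to make it more robust.

Using AI to evaluate EEAT can save time and effort and give you ideas for improving the ranking and credibility of your website.

The script is fast and inexpensive, but its results are only as good as the prompt and the model: treat them as a guide for spotting issues and prioritizing work, not as the opinion of an expert consultant.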

What are the limitations of using artificial intelligence to assess EEAT?

There are several limitations to using artificial intelligence (AI) to assess the EEAT (Experience, Expertise, Authoritativeness, Trustworthiness) of a URL.

Here are some of the most notable ones:

- Lack of context: AI algorithms may not be able to fully understand the context in which a piece of content was created or published. For example, they may not be able to differentiate between an academic article and a blog post, which could affect their assessment of the content’s authority and expertise.

- Bias: AI algorithms can be trained on biased data, which can lead to biased results. For example, if an AI algorithm is trained on data that predominantly represents a particular viewpoint, it may have difficulty accurately evaluating content that challenges that viewpoint.

- Limited understanding of human behavior: AI algorithms may not be able to fully understand human behavior, such as the motivations that lead someone to create or share content. This can impact their ability to accurately assess the trustworthiness of particular content.

- Limited ability to verify information: although major improvements are expected in this area, for the time being AI algorithms may not be able to accurately verify the information presented in a piece of content. This could lead to inaccurate assessments of the content's expertise and authority.

- Lack of transparency: some AI algorithms may not be transparent about how they arrived at their EEAT assessments. This can make it difficult for users to understand why a particular piece of content has been deemed more or less trustworthy, authoritative or expert.

In general, while AI can be a powerful tool for assessing the EEAT of a piece of content or a URL, it is important to be aware of these limitations and to use AI alongside other approaches to get the most accurate and reliable assessment possible.

You can also try to address these limitations by refining each of the instructions in the prompt.

How long does it take to evaluate the E-A-T of a URL using the Google Colab script?

The truth is that it only takes a few seconds or minutes to present the results once the script has been executed. In addition, not only do you get a score, but we also provide you with an explanation and several suggestions for improving the score.

If you compare this to a manual review, you will realize how invaluable what we have just shared with you is.

Is the use of the Google Colab script free of charge?

The script itself is free of charge from our side, but it runs on your OpenAI API key, and API usage is not free.

Thus, if you run the code we have provided, tokens will be consumed from your account. The page text sent to the model is capped at its first 5,000 characters; in the video example, that text came to 776 tokens before trimming.

However, the complete request for that URL, including the system message and the full prompt, came to 1,353 tokens.

At gpt-3.5-turbo's price when the video was recorded ($0.002 per 1,000 tokens), that works out to roughly $0.0027 per request.

It is not a large amount, but if you repeat this process many times or load numerous URLs from a spreadsheet, the cost will multiply proportionally.
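The arithmetic behind that estimate fits in a small helper. This sketch assumes the $0.002 per 1,000 tokens that gpt-3.5-turbo cost at the time of the video (check OpenAI's current pricing before relying on it) and counts only the request tokens; the model's answer is billed too, so add its tokens for a fuller estimate. The function name is hypothetical.

```python
def estimate_cost(tokens_per_request: int, n_requests: int,
                  usd_per_1k_tokens: float = 0.002) -> float:
    """Estimated cost in USD of n_requests requests of the given token size.

    The default price is an assumption (gpt-3.5-turbo's price at the time of the video).
    """
    return tokens_per_request * n_requests * usd_per_1k_tokens / 1000
```

For example, 1,000 URLs with requests similar in size to the video example (`estimate_cost(1353, 1000)`) come to about $2.71 before counting the answers.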

Keep this in mind.


Evaluate the EEAT of your website with the help of artificial intelligence!

Alvaro Peña de Luna

Co-CEO and Head of SEO at iSocialWeb, an agency specializing in SEO, SEM and CRO that manages more than 350M organic visits per year and has a 100% decentralized infrastructure.

He is also involved in Virality Media, a company with its own projects totaling more than 150 million monthly visits across different sectors and industries.

Systems Engineer by training and SEO by vocation. Tireless learner, fan of AI and dreamer of prompts.